Customer/Developer Point of View

“Once upon a time there was an SAP Analytics Cloud (SAC) analytics model, based on an external SAP HANA view. And it was doing great. It grew and grew and grew, until the MDS (1) statement became so big that it crashed every time someone tried to execute it. The master story (and all the other stories built on this model), with over 200 graphs and tables, performed well until, one day, performance broke down: it took over 2 minutes just to finish loading the first page, with its more than 100 graphs, tables, and other widgets. And that is where our story begins …”

In general, if you create a story from a management (and key-user) point of view, you want to show as many graphs, tables, and data as possible. You want a nice dashboard with all the information available. But be aware: the more graphs you have on one page, the longer it takes to load that page, and the story overall. Performance suffers a great deal.

For large companies with a wide variety of products, for example, pages with up to 120 graphs are a reality. Think about what you really want to see before creating your story (and possibly your model). Instead of one huge page, use, for example, a content page that links to other, smaller stories, or create story pages with no more than 10 graphs each. Even splitting a page with 20 graphs and a table into one page of graphs and one page with the table can improve performance by 20%.

(SAP recommends 8 to 10 graphs per page, each with a low number of data points. For larger companies, this is sometimes a real problem.)

If you can apply many of the filters in the underlying external SAP HANA view, less data has to be processed in the end. Also, where possible, create all restricted and calculated key figures in the underlying query rather than in the SAC model.

The same applies from the model point of view: large models with many dimensions and measures tend to devour performance, and leaner models perform much better, where that is possible. However, it is not feasible to create a new model for every story either: the testing effort is higher and can lead to frustration on the business/testing side.

Issues and Best Practices

- Blending with an xls file

If you have an SAP HANA live data model and blend in, for example, an xls file for a specific measure in your story, then display it in a bar chart filtered on specific dimensions, the chart can take up to 1 min to load. If possible, bring the data from the xls file into your main story model to avoid blending; this improves performance.

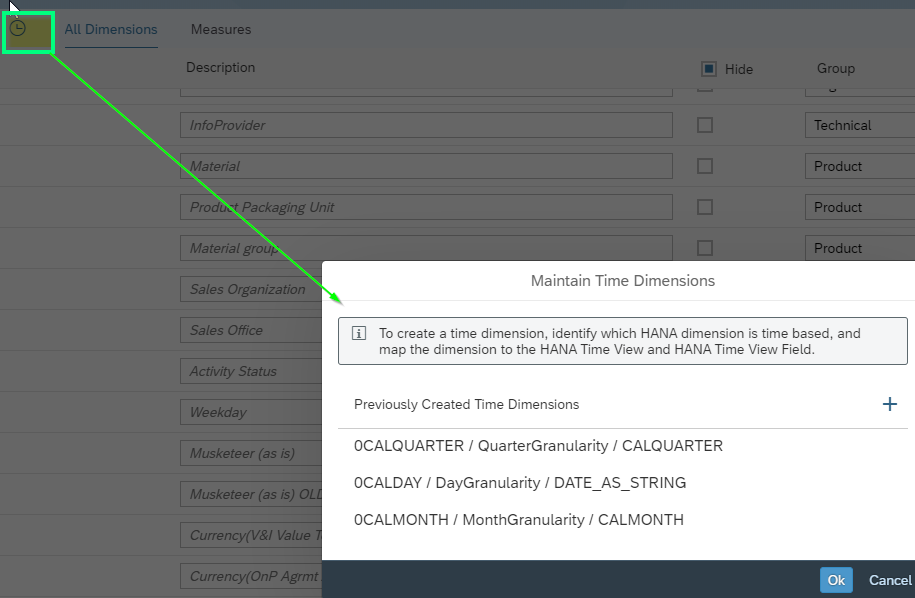

- 0CALDAY Hierarchy activated

An activated SAP BW 0CALDAY hierarchy in the underlying source system led to very high idle time. For our initial story, the 0CALDAY hierarchy was activated, and the idle time in Chrome while loading the story was very high. Deactivating this one hierarchy alone reduced it by up to 30%. Why? Because at the start, all the different sub-hierarchies are set up; for 0CALDAY, this meant not only 0CALDAY but also 0CALYEAR, 0CALWEEK, etc. If you need time and ranges for the 0CAL dimensions, use the time dimensions available within the SAC model.

- Use Unrestricted Drilling

If a graph or table has a filter on a time dimension, activate unrestricted drilling. This improves the widget's performance.

- Exception Aggregations

Recommendation from SAP (2): Avoid specifying Exception Aggregations in the Model and instead, use the Restricted Measures or Calculation functionality in your stories.

(Source: https://www.sapanalytics.cloud/resources-performance-best-practices/)

This best practice is not feasible for companies that have measures counted over all customers, or that want to show only the last value on a specific dimension. You will have performance issues when using exception aggregations over a dimension with many data points. Avoid them if possible; if you need them, you have to accept weaker performance. Unfortunately, this is the reality and cannot be changed at the moment.
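To illustrate why a count over all customers is expensive, here is a simplified Python sketch with hypothetical data (it is not how the SAC engine works internally). A distinct count cannot simply be summed from subtotals, so the total has to be recomputed from the detail rows at customer level; with many customers, far more data has to be read and aggregated.

```python
# Hypothetical booking rows: (region, customer). Customer C2 is booked in both regions.
bookings = [
    ("North", "C1"),
    ("North", "C2"),
    ("South", "C2"),
    ("South", "C3"),
]


def distinct_customers_by_region(rows):
    """Count distinct customers per region (the subtotals a chart would show)."""
    per_region: dict[str, set[str]] = {}
    for region, customer in rows:
        per_region.setdefault(region, set()).add(customer)
    return {region: len(customers) for region, customers in per_region.items()}


subtotals = distinct_customers_by_region(bookings)  # North: 2, South: 2
naive_total = sum(subtotals.values())  # 4, because C2 is counted twice
correct_total = len({customer for _, customer in bookings})  # 3, needs every customer row again

print(subtotals, naive_total, correct_total)
```

A normal sum could be aggregated once and reused for every total; the distinct count (like a "last value") has to go back to the granularity of the customer dimension, which is what makes it slow on dimensions with many members.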

- Show unbooked data in a chart

Recommendation from SAP (2): Whenever possible, choose to show unbooked data in a chart. This means that the software must spend less time differentiating between booked and unbooked data.

(Source: https://www.sapanalytics.cloud/resources-performance-best-practices/)

This best practice is not feasible in many cases either. If, for example, you have thousands of customers but data is booked on a certain dimension for only a few hundred of them, showing unbooked data produces a lot of empty rows in tables and in some charts. Do not show unbooked data in tables: scrolling becomes a pain, because the table lists all customers in the hierarchy, including those with no booked data for that dimension. For charts, it can be an option, depending on your filters and how you build the chart.

- Not-used Measures and Dimensions

If you already have a larger model set up, go through all its measures and dimensions and delete the unnecessary ones. This improves performance even if you do not use them in your story. Leaner models perform better!

- Maximum number of graphs

SAP recommends using no more than 8 to 10 charts per page. If you have many more on one page, think about restructuring it around what your end users will definitely need.

Chrome can process only 6 requests in parallel, so keep as few elements on a page as possible. For example, if you have 18 charts on one page, Chrome initially requests data for the first 6 charts. Each time one chart has finished loading, data for another chart can be requested. This adds up.
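The "this adds up" effect can be estimated with a small Python simulation. It is a sketch under simplifying assumptions: each widget sends exactly one data request, at most 6 requests run at once (the figure given above), and the next queued request starts as soon as any running one finishes. The request durations are hypothetical, not measured values.

```python
import heapq


def page_load_time(durations, max_parallel=6):
    """Estimate when the last widget on a page finishes loading.

    durations: hypothetical request duration (seconds) per widget, in request order.
    At most max_parallel requests run at the same time.
    """
    # Each entry is the time at which one parallel slot becomes free again.
    free_at = [0.0] * min(max_parallel, len(durations))
    heapq.heapify(free_at)
    finished = 0.0
    for duration in durations:
        start = heapq.heappop(free_at)  # earliest free slot
        end = start + duration
        finished = max(finished, end)
        heapq.heappush(free_at, end)
    return finished


# 18 charts at 2 s each load in three waves of 6; 6 charts fit into a single wave.
print(page_load_time([2.0] * 18))  # 6.0
print(page_load_time([2.0] * 6))  # 2.0
```

Under these assumptions, every additional block of 6 widgets adds another full round of requests to the page load, even if each individual chart is fast.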

Expert tip for Chrome: when opening a large story page, zoom out (to 25%, for example). Chrome can then load more requests in parallel, so the story opens much faster.

Conclusion

After evaluating and measuring the performance issues of the initial story, we deleted unnecessary measures, deactivated the hierarchy on the 0CALDAY dimension, and eliminated the blended xls file, replacing it with data uploaded to the data model.

In addition, we activated the unrestricted drilling option for all graphs and tables that use a filter on a time dimension. With these changes, the initial loading time of a story page with over 100 graphs, for example, dropped from 2:40 min to 60 seconds.

What we learned: sometimes it is not possible to follow all the recommendations, especially with huge data sets, but it is necessary to keep checking for new SAC features that improve performance and usability, and to implement them where possible.

Support from SAP consultants is also necessary to evaluate and measure models, stories, and widgets in the back end and to find solutions. There are analysis tools and programs that are very helpful for determining where performance issues may come from; you can only get so far analyzing in the front end. For bigger projects, cooperation between front end and back end is essential for the result.

SAC is still in its infancy, and updates to improve performance are released constantly.

A clear concept of what you need and want from the story and model in the beginning is crucial and most helpful in the long run.

Footnotes

1 https://launchpad.support.sap.com/#/notes/2670064

2 https://www.sapanalytics.cloud/resources-performance-best-practices/

Links for more information

https://apps.support.sap.com/sap/support/knowledge/public/en/2511489

An interesting how-to on measuring performance in Google Chrome: http://test.zpartner.at/how-to-trace-widgets-in-sap-analytics-cloud-stories-connected-via-hana-live-data-connection/

If you want to activate a hierarchy for use in SAC: http://test.zpartner.at/how-to-activate-master-data-hierarchies-for-sap-analytics-cloud-sac/